LOAD DATA (Files Load)

The LOAD DATA command allows you to write raw data into Indexima tables.

Syntax

Load Data from local disk

LOAD DATA [LOCAL]

INPATH '<protocol>://<absolute_path_to_data>'

INTO TABLE <table_name>

FORMAT CSV|ORC|PARQUET|JSON

SEPARATOR ',|;|\t'

[SKIP <number_of_row_to_skip> ]

[LIMIT <limit_number>]

[PATTERN <file_pattern>]

[DPATTERN <folder_pattern>]

[PREFIX <prefix>]

[SUFFIX <suffix>]

[DATE_FORMAT <date_format>]

[TIMESTAMP_FORMAT <timestamp_format>]

[LOCALE <locale_identifier>]

[NOCHECK]Parameters

| Parameter name | Description | Possible values |

|---|---|---|

LOCAL | If used, this keyword tells the Master node to read data from his own filesystem. | Empty | LOCAL |

protocol | The type of datalake you want to load data from. | s3 | adl | gs | hdfs |

| absolute_path_to_data | The absolute path to a folder containing all the files you want to load data from. If LOCAL is specified, only the Master node needs to have read access to this path. | Any absolute path is readable by the Indexima nodes. |

| table_name | The name of the Indexima table you want to load the data into. | The exact name of the Indexima table you want to load data into. The structure of the files must match the structure of the table. |

| number_of_row_to_skip | The number of rows to skip before starting the load. This is used to skip the first or the 2 first rows, which are usually header rows. | Any positive number. |

| limit_number | Maximum Number of rows that will be read from each file. | Any positive number. |

| file_pattern | Only files beginning with the defined pattern would be loaded. Must end with a star. | |

| folder_pattern | load all files contained in <absolute_path_to_data>: Use "*" (star) as the wilcard and "/" for subfolder

| |

| prefix | see below in "Load partitions from Hive table". | |

| Suffix | supports only CSV format, same concept as prefix applied for missing column at the end of each line. | |

| DATE_FORMAT | Specify a format to parse the dates in input file. | |

| TIMESTAMP_FORMAT | Specify a format to parse the timestamps in input file. Eg: TIMESTAMP_FORMAT 'dd/MM/yyyy HH|mm|ss' The pattern must comply to Java DateTimeFormatter (https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html) | |

| LOCALE | The local identifier used to parse the input file. This identifier describe the expected format for decimal fields. Eg: LOCALE 'fr' | |

| NoCheck | Without this option, INDEXIMA checks if loaded values are compatible with the datatype set when creating the table. INDEXIMA triggers errors when datatypes do not match. |

Load Example

LOAD DATA

INPATH 'mypath'

INTO TABLE myschema.mytable

FORMAT CSV

SEPARATOR ','

SKIP 1

LIMIT 10

PATTERN 'Mypattern*'

PREFIX 'myprefix'

DATE_FORMAT 'dd_MM_yyyy'

TIMESTAMP_FORMAT 'dd/MM/yyyy HH|mm|ss'

NOCHECKLoad with comma as decimal separator in input file

lang-en.csv

1;10.05;hello

lang-fr.csv

2;12,98;salut

Commands

CREATE SCHEMA tmp;

CREATE TABLE tmp.t1(i int, d double, s string, main index idx_0(i));

LOAD DATA IN PATH '/home/csv/lang-en.csv' into table tmp.t1 format CSV SEPARATOR ';' LOCALE 'en';

LOAD DATA IN PATH '/home/csv/lang-fr.csv' into table tmp.t1 format CSV SEPARATOR ';' LOCALE 'fr';

COMMIT tmp.t1;

SELECT * FROM tmp.t1;

Result

d s

10.05 hello

12.98 salut

Load partitions from Hive table

If you want to load a specified set of partitions and not all partitions from a Hive table at once, then provide a path of the root partition and add PREFIX 'column_values' to specify the values for the partition columns.

LOAD DATA INPATH 'hdfs://data_node:8020/apps/hive/warehouse/my_imported_data_orc/year=2018/month=12/day=10'

INTO TABLE my_test

FORMAT orc

PREFIX '2018,12,10,'If the Hive table is in tab-separated format, the separator must be \t so the statement looks like PREFIX '2018\t12\t10\t'.

Load Example with a suffix

Add SUFFIX 'column_values' to specify the values that will finish the CSV line when there is fewer columns than expected (defined by the list of columns in the table creation).

LOAD DATA INPATH 'hdfs://data_node:8020/apps/hive/warehouse/myORCtable'

INTO TABLE my_test

FORMAT CSV

SUFFIX 'XX,YY'Load Example with DPATTERN

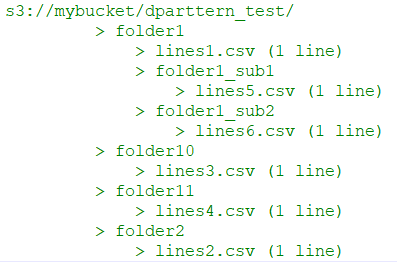

Consider the following tree view

Here is a list of examples with the expected behavior.

-- All files within s3a://mybucket/dparttern_test/ --> Insert 6 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN '**';

--All files within any subfolders with a name beginning with folder1_sub --> Insert 2 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN '**/folder1_sub*';

--All files within the any subfolders with a name beginning with folder1_sub AND files names beginning with lines --> Insert 2 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN '**/folder1_sub*' PATTERN 'lines*';

--All files within any folders with THE name folder1 AND files names beginning with lines --> Insert 3 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN 'folder1/**' PATTERN 'lines*';

--All files within any folders with a name beginning with folder1 AND files names beginning with lines --> Insert 5 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN 'folder1*/**' PATTERN 'lines*';

--All files within any subfolders with a name containing with 'sub' --> Insert 2 lines

LOAD DATA INPATH 's3a://mybucket/dparttern_test/' INTO TABLE tmp_sco.tbl1 FORMAT CSV SEPARATOR ',' DPATTERN '**/*sub*';