When the Index is too big

Context

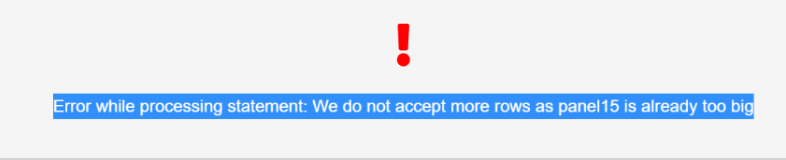

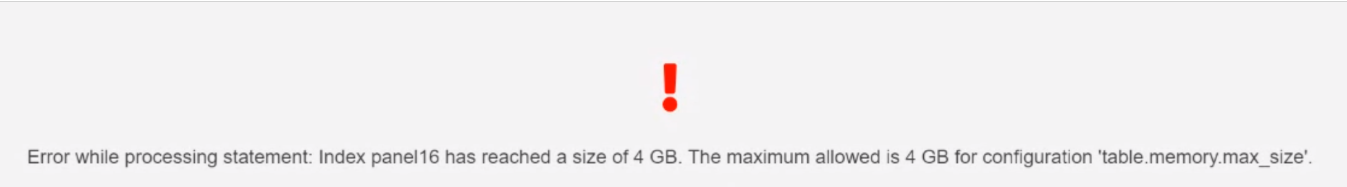

When loading indexes or large tables, you may encounter one of the following error messages:

- We do not accept more rows as <index_name> is already too big.

- Index <index_name> has reached a size of 4GB. The maximum allowed ...

Reasons for the error message

These errors appear when an index or a table reaches its configured size limit. Two parameters set these limits to prevent a node from running out of memory when working with large tables or Hyperindexes:

- index.memory.max_size.mb, which defines the maximum size (MB) of an index per node.

- table.memory.max_size.mb, which defines the maximum size (MB) of a dataspace per node.

Best Practices to prevent error messages

1. Check the Hyperindex definition.

When a Hyperindex is too big, the cardinality of some fields may be too high. Consider excluding those fields when defining your index.

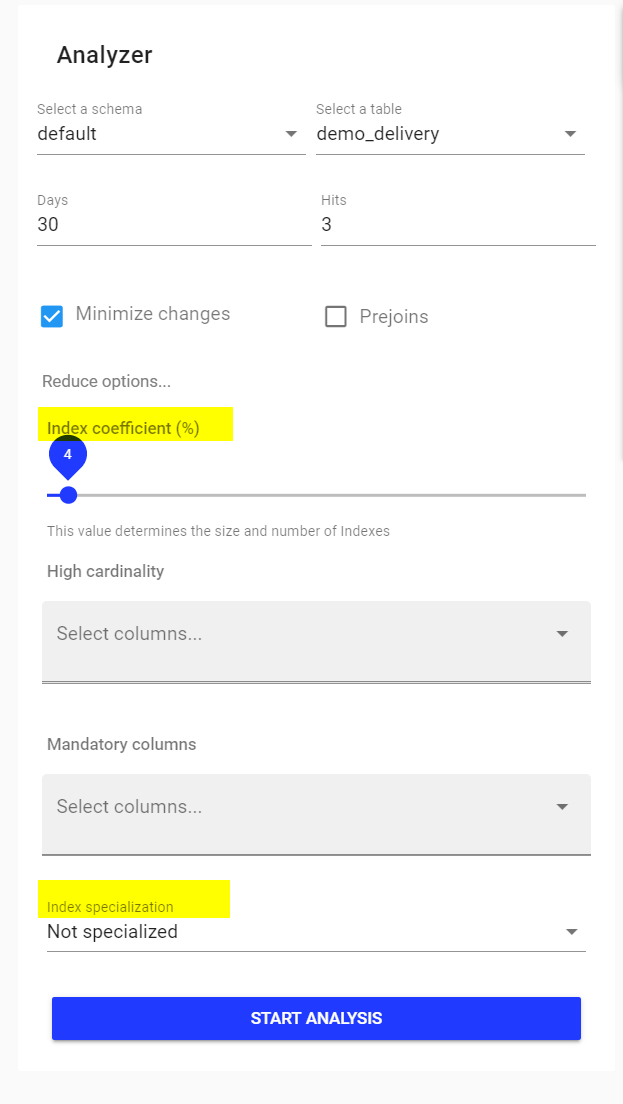
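One way to spot high-cardinality fields before excluding them is to count distinct values per column. The query below is only a sketch: the table `sales` and the columns `customer_id` and `country` are hypothetical names, and it assumes the engine accepts standard `COUNT(DISTINCT ...)` syntax.

```sql
-- Hypothetical table and column names, for illustration only.
-- A column whose distinct count approaches the total row count
-- is a likely candidate for exclusion from the Hyperindex.
SELECT
  COUNT(*)                    AS total_rows,
  COUNT(DISTINCT customer_id) AS customer_id_cardinality,
  COUNT(DISTINCT country)     AS country_cardinality
FROM sales;
```

On very large tables this kind of query can itself be expensive, so it may be worth running it on a sample or on one column at a time.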

2. Run an analysis with non-default parameters.

- In Index Coefficient:

You can adjust the index coefficient so that the analyzer suggests a smaller index.

- In Index Specialisation:

You may have to change from the Not Specialized default value to Half or Fully Specialized.

3. Change configuration limits.

Increase index.memory.max_size.mb

SET_ index.memory.max_size.mb = <value>;

Note that the default value of index.memory.max_size.mb is 1024 (MB).

Increase table.memory.max_size.mb

SET_ table.memory.max_size.mb = <value>;

Note that the default value of table.memory.max_size.mb is 4096 (MB).
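As an illustration, doubling both limits from their default values could look like the following. The values 2048 and 8192 are arbitrary examples, not recommendations; both limits apply per node, so they should be sized against the memory actually available on each node.

```sql
-- Example values only: twice the defaults stated above (1024 and 4096 MB).
SET_ index.memory.max_size.mb = 2048;
SET_ table.memory.max_size.mb = 8192;
```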